Master Retrieval-Augmented Generation—end ChatGPT hallucinations, access live data, and see why 80% of enterprises back this $40 billion AI upgrade with this guide

Nate

Imagine you meet someone brilliant—someone who seems to know absolutely everything. Every answer they give feels sharp, insightful, even groundbreaking. Now, picture this person having one fatal flaw: every so often, they confidently state something that’s totally wrong. Not just wrong, mind you, but spectacularly incorrect—like insisting that Abraham Lincoln was a professional skateboarder. Welcome to the current state of Large Language Models (LLMs).

As fascinating and powerful as AI systems like ChatGPT and Claude have become, they still possess what I affectionately (and sometimes frustratingly) call a “frozen brain problem.” Their knowledge is permanently stuck at their last training cutoff, causing them to occasionally hallucinate answers—AI jargon for confidently stating nonsense. In my more forgiving moments, I compare it to asking a very smart student to ace an exam without any notes: impressive, yes, but prone to error and entirely reliant on memory.

That’s where Retrieval-Augmented Generation, or RAG, enters the chat. RAG fundamentally reshapes what we thought possible from AI by handing these brilliant-but-flawed models a crucial upgrade: an external, dynamic memory. Imagine giving our hypothetical brilliant person access to an extensive, always-up-to-date digital library—now every answer can be checked, validated, and supported with actual data. It’s like turning that closed-book exam into an open-book test, enabling real-time, accurate, and trustworthy answers.

The stakes couldn’t be higher. We’re moving quickly into a future where businesses, hospitals, law firms, and schools increasingly rely on AI to handle complex information retrieval and decision-making tasks. According to recent market analyses, this isn’t a niche upgrade—it’s a seismic shift expected to catapult the RAG market from $1.96 billion in 2025 to over $40 billion by 2035. Companies who fail to embrace RAG risk becoming like video rental stores in the Netflix era: quaint, nostalgic, but rapidly obsolete.

I’ve spent considerable time sifting through the noise, experimenting, succeeding, and occasionally stumbling with RAG. This document you’re holding—or, more realistically, scrolling through—is the distilled result: a 53-page guide that’s comprehensive, nuanced, and occasionally humorous (I promise, there’s levity amidst the deep dives into cosine similarity and chunking strategies). Whether you’re a curious novice or a seasoned practitioner, there’s gold here for everyone.

Inside this guide, we’ll demystify exactly how RAG works—retrieval, embedding, generation, chunking, and all—using analogies clear enough for dinner party conversations and precise enough for your next team meeting. We’ll explore advanced techniques, including hybrid searches and multi-modal retrieval, to ensure you don’t just understand RAG—you master it. We’ll even examine some cautionary tales from companies who jumped in headfirst without checking the depth (spoiler: they regret it).

Why should you read this? Because memory matters. In AI, memory isn’t a nice-to-have feature; it’s the essential backbone that transforms impressive parlor tricks into reliable, transformative technology. If you’re investing in AI, building products, or even just navigating an AI-driven world, understanding RAG isn’t optional—it’s critical.

So, pour a coffee, settle in, and let’s tackle this together. You’re about to gain the keys to AI’s memory revolution, ensuring your AI doesn’t just sound brilliant but actually knows its stuff. Welcome to your next-level guide on Retrieval-Augmented Generation: AI’s long-awaited memory upgrade.

Subscribers get all these pieces!

Subscribed

From 0 to 5K: The Complete Simplified Guide to RAG (Retrieval-Augmented Generation)

=========================================================================================

Imagine if ChatGPT had perfect memory – never hallucinating, and able to tap your company’s entire knowledge base in real time. That’s the promise of Retrieval-Augmented Generation (RAG), and it’s changing everything about how we build with AI. In this guide, we’ll demystify RAG from the ground up, transforming complex concepts into an engaging, accessible journey for AI enthusiasts.

Why RAG Changes Everything

Picture this: you ask your AI assistant a question, and it instantly pulls up exactly the right facts from your company docs, giving a confident answer with references. No more “hallucinated” nonsense – just accurate, up-to-date info. This isn’t sci-fi; it’s RAG, and it’s big. Analysts project the RAG market to soar from about $1.96 billion in 2025 to over $40 billion by 2035. Companies are betting big on RAG because it tackles AI’s biggest weak spots (memory and truthfulness) head-on.

Did you know? LinkedIn applied RAG (with a knowledge graph twist) to their customer support and slashed median ticket resolution time by 28.6%. And they’re not alone. Roughly 80% of enterprises are now using RAG approaches (retrieval) over fine-tuning their models – a massive shift in strategy. Why? Because RAG gives AI real-time data access, and that’s gold. One survey found nearly 73% of companies are engaged with AI in some form , and providing those AI systems with current, relevant data is the new race. In other words, the companies winning in 2025 aren’t the ones with the biggest model – they’re the ones whose AI knows their business inside and out.

So buckle up. By the end of this guide, you’ll see why RAG is the hot topic (a $1.96B opportunity and growing ), how it’s delivering “wow moments” like LinkedIn’s support success, and why 73%+ of orgs are scrambling to give their AI a real-time knowledge upgrade. RAG changes everything by making AI both smart and knowledgeable – and today, we’ll show you how to go from 0 to RAG hero in an approachable, step-by-step narrative.

RAG Basics: Your AI Gets a Research Assistant

If a large language model (LLM) is like a brilliant student who studied everything up until 2023, then RAG gives that student a real-time library card. It’s like letting your AI take an open-book exam instead of relying on memory alone. How does that work? Think of RAG as giving your AI a research assistant: when asked a question, the AI can Retrieve relevant info from a knowledge source, Embed that info into a form it understands, and then Generate a final answer using both its built-in knowledge and the retrieved facts.

Analogy alert: LLMs are like students; RAG lets them bring notes. As one engineer quipped, “LLMs don’t know – they predict. Their memory is frozen. That’s where RAG changes the game. It’s like giving the model an open-book test: it still has to reason, but now it gets to reference something real”. In practice, that means when an LLM gets a query, a RAG system will first fetch relevant text (from your documents, websites, etc.), and supply those facts to the model so it can formulate a grounded answer. The magic three-part process is:

- Retrieval: Take the user’s question and search a knowledge source for relevant info (just like Googling or querying a database).

- Embedding: Behind the scenes, both the question and documents are converted into numerical embeddings – basically, turning words into vectors (imagine coordinates in a 1536-dimensional space for OpenAI’s ada-002 model ) so that semantic similarity can be computed.

- Generation: The LLM receives the question plus the retrieved context and generates a final answer, augmented by these real-time facts.

Traditional LLMs have a fixed knowledge cutoff and often bluff when asked something outside their training. RAG makes the LLM’s knowledge dynamic and verifiable. It’s the difference between a student taking a closed-book test (relying on possibly outdated memory) vs. an open-book test with the latest textbook in hand. For example, without RAG, an LLM is stuck with whatever it learned in training – ask it about something it never saw, and it might just make up an answer. With RAG, we first retrieve the latest relevant info and feed it in, so the model’s answer can cite real data.

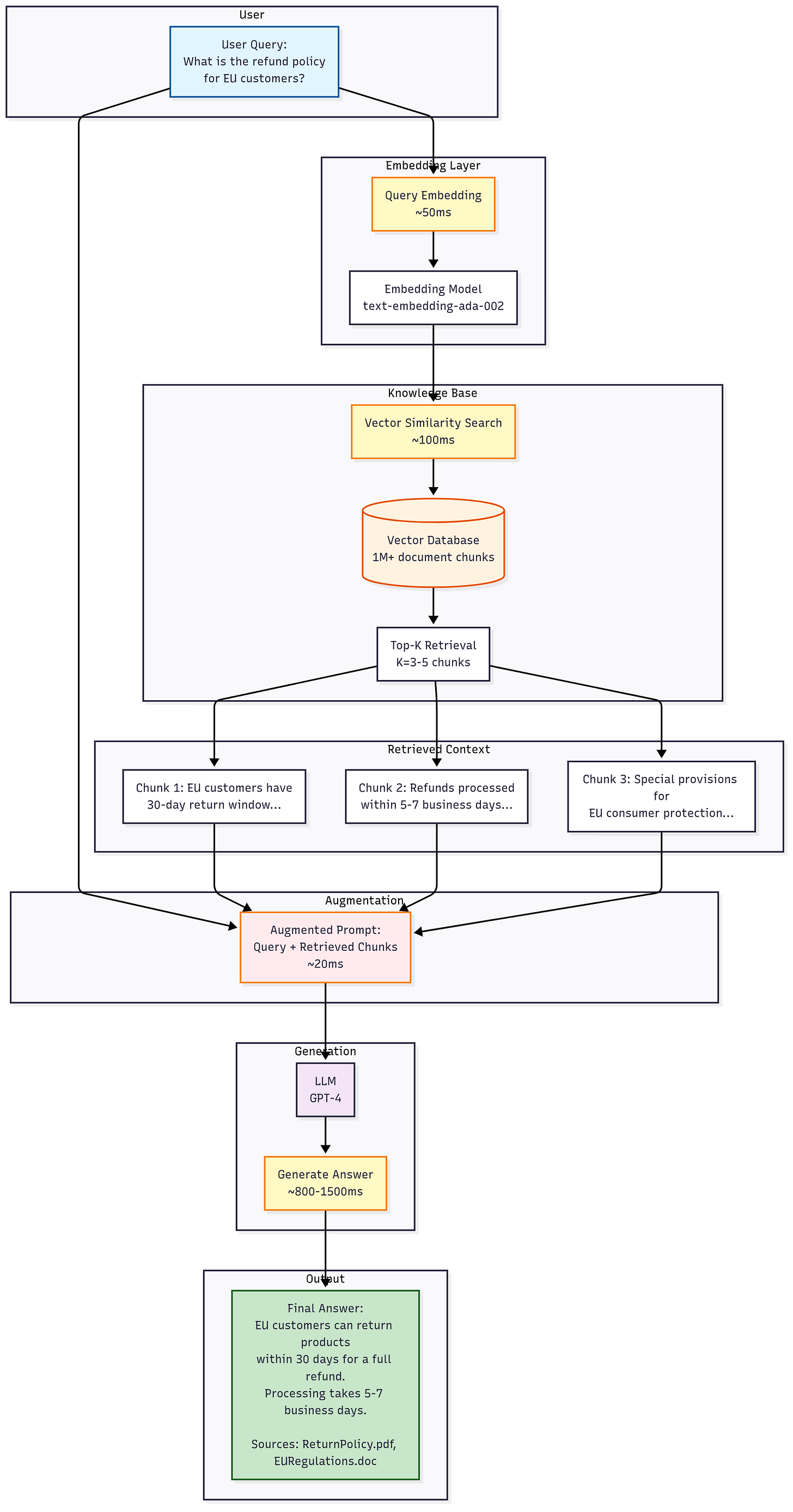

Here’s a simple diagram of a RAG workflow, which shows how a query flows through retrieval into generation:

Notice in the diagram: your AI isn’t just guessing from its frozen memory; it actively searches your knowledge base for context. This approach virtually eliminates those “sorry, I don’t have that info” dead-ends and dramatically reduces hallucinations. Users gain trust because the AI can show sources for its answers. In fact, RAG is often introduced specifically to boost factual accuracy and up-to-dateness. One AWS expert described a base LLM as an “over-enthusiastic new employee who refuses to stay informed” – RAG is how you get that employee to check the company wiki before answering !

But doesn’t adding retrieval make things slower? It’s a common concern that giving an LLM a “research step” will add too much latency. In reality, modern RAG systems are incredibly snappy. Vector search engines can fetch relevant chunks in tens of milliseconds, and overall RAG query times often land in the few-hundred-millisecond range. For instance, engineers report end-to-end RAG responses around 300–500 ms in practice – essentially real-time for most apps. Even complex multi-hop queries that pull lots of data might take a couple seconds at most. So while vanilla ChatGPT might answer in ~1–3 seconds, a well-tuned RAG might be 0.5–5 seconds depending on complexity. In conversational terms, that’s barely noticeable, and it’s a small trade-off for answers grounded in truth. (And with some clever caching and indexing, many RAG systems actually outpace humans hunting through documents – your support bot might answer in 500 ms what took a human agent 5 minutes.)

Bottom line: RAG gives your AI “retrieval superpowers.” Instead of being limited to what it memorized, it can search and cite fresh, relevant information on the fly. It’s like upgrading your genius student (LLM) with an always-available research librarian. In the next sections, we’ll dive deeper into how it works under the hood – but at its heart, RAG is the simple yet profound idea of augmenting generation with retrieval. It turns out this one idea addresses a lot of AI’s toughest challenges (hallucinations, stale knowledge, lack of trust). No wonder 73% of organizations are racing to implement AI with real-time data access – RAG makes AI not just smarter, but wisely informed. And that changes everything.

Under the Hood: How RAG Really Works

Let’s lift the hood on this RAG engine and see the mechanics in action. There are three technical concepts that make the RAG magic possible: embeddings (turning text into vectors), chunking (breaking text into retrievable pieces), and similarity search (finding which pieces are relevant). Don’t worry – we’ll break each concept down with simple analogies and visuals so it all clicks.

The Journey from Text to Vector

In RAG, your words aren’t just words – they’re coordinates in a high-dimensional space. When we say we “embed” text, imagine plotting meanings on a giant star map with 1,536 dimensions. For example, the phrase “customer refund policy” might become a vector like [0.23, -0.45, 0.67, …] (with 1,536 numbers). What do those numbers mean? Individually, not much to a human – but collectively they position the phrase in a semantic space where distance correlates with meaning. Two pieces of text that mean similar things will end up as vectors that are close together (small angle between them), even if they don’t share any keywords. This is why embedding is so powerful: similar meanings cluster together in vector space.

The state-of-the-art embedding model many use is OpenAI’s text-embedding-ada-002, which produces 1536-dimensional vectors and is remarkably good general-purpose. Ada-002 was a milestone because it collapsed multiple embedding tasks into one uber-model and made it cheap and easy via API. But it’s not the only game in town. Companies like Cohere offer embedding models (for instance, Cohere’s embed-english-v3 has its own dimensionality and strengths ), and open-source models like E5 or InstructorXL are now rivaling the proprietary ones. In fact, recent leaderboards (like MTEB) show tiny open-source models can come within a few percentage points of Ada’s accuracy. The takeaway: embedding models are evolving fast. Ada’s 1536-d vector was cutting-edge in 2022, but by 2025 we have specialized embeddings for images, code, multi-lingual data, etc., and some open models tuned for certain domains can outperform the general ones. The good news is that the concept is the same – whatever model you choose, it converts text into vectors such that meaningful similarity = mathematical closeness.

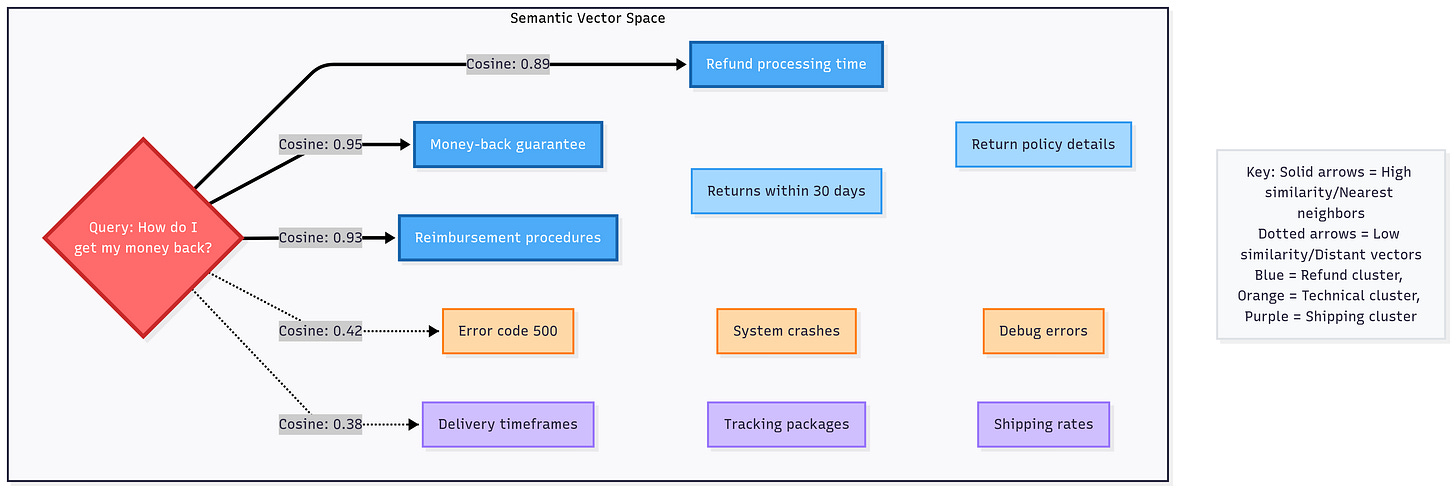

To visualize it, imagine each document chunk as a point in a cosmic galaxy. All chunks about refund policies cluster in one nebula; all chunks about technical errors cluster elsewhere. When a query comes in (“How do I process a refund?”), we embed the query into this same space and see which document points are nearest. Those nearest neighbors are likely talking about refunds too, even if they don’t share the exact wording of the question.

One mind-blowing fact: OpenAI’s 1536-dim embedding can capture incredibly nuanced meaning. For instance, it will place “Apple pay refund” close to “reimbursing customers via Apple Pay” even if the wording differs, because the core idea is the same. This semantic clustering is something old keyword search couldn’t do – it would miss synonyms or paraphrases – but embeddings nail it. It’s like magic: the model somehow knows that “NDA” and “non-disclosure agreement” are related, or that a Jaguar (animal) is different from Jaguar (car), based on context usage. Of course, the model doesn’t “know” in a human sense; it’s all statistical correlation from training. But the effect is a vector space where related ideas gravitate together.

Before we move on: you’ll often hear about cosine similarity vs. dot product vs. Euclidean distance as ways to measure vector closeness. Here’s a quick cheat sheet: cosine similarity cares only about the angle between vectors (essentially their orientation, ignoring magnitude). Dot product is like cosine but also scales with magnitude (two vectors in the same direction will register even more similar if they’re longer). Euclidean distance is the straight-line distance. Many systems use cosine or dot (with normalized embeddings, dot and cosine become equivalent). Conceptually, you can think: cosine = how aligned are the meanings, dot = aligned + confidence, Euclidean = literal distance considering all components. The basic rule is actually simple: use whatever the embedding model was trained with. Ada was trained with cosine, so use cosine for Ada vectors. Some newer models use dot product. As long as you match it up, you’re golden. We won’t belabor the math – just know these metrics exist, and cosine is popular because it neatly ignores differences in length and focuses on meaning direction.

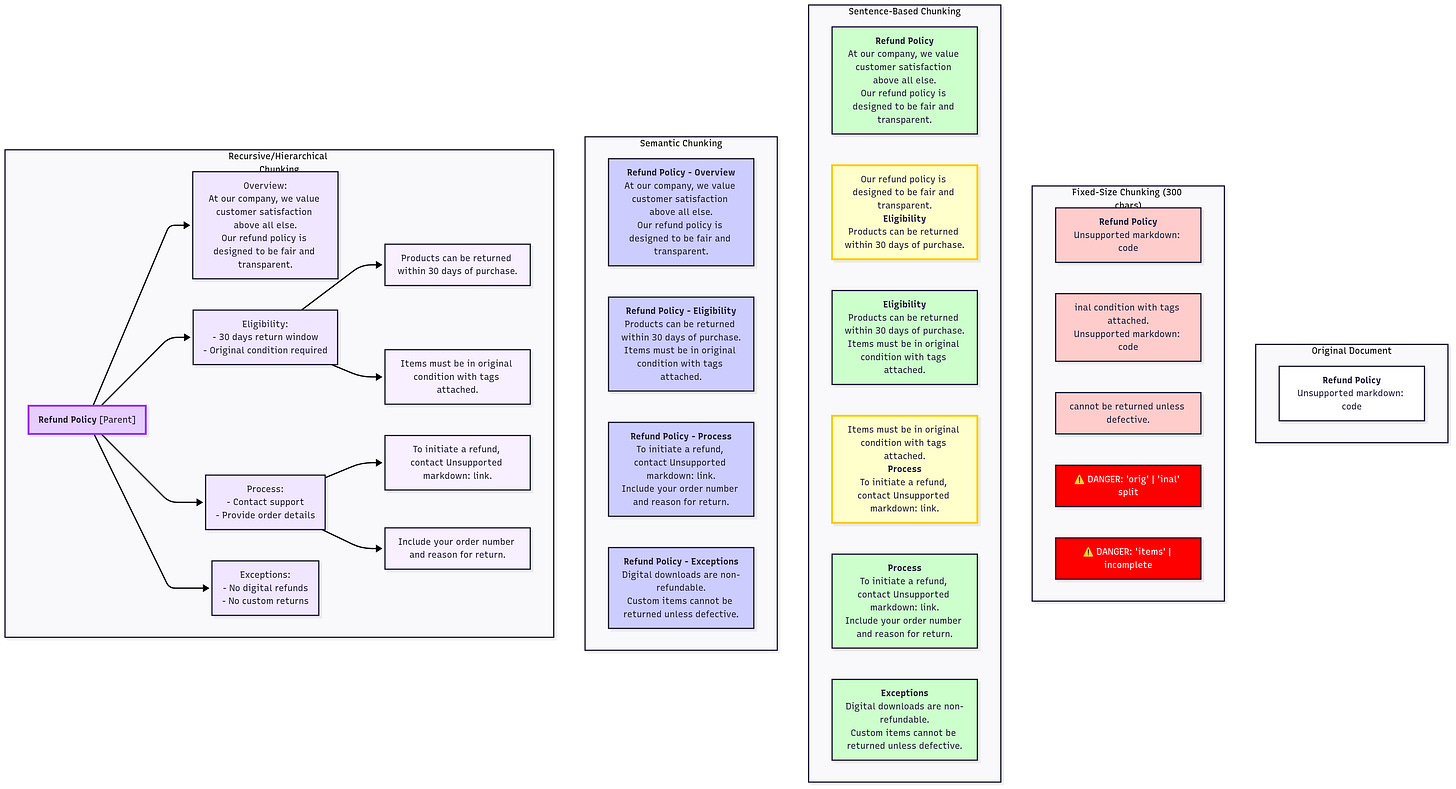

The Art and Science of Chunking

Now our text is embedding-ready – but wait, we can’t just embed a whole huge document in one go (context windows have limits). We need to chunk documents into pieces. Chunking is an unsung art in RAG. Do it wrong, and you “shred” context and lose meaning; do it right, and your retrieval is laser-precise. It’s said that bad chunking is responsible for sinking up to 40% of RAG projects – anecdotally, one expert looked at 10+ RAG implementations and found 80% had chunking that broke context. Ouch.

It’s an eye-chart, so I made a Notion where you can zoom in.

So what are the chunking strategies, and which actually work? Here are four you should know, from simplest (and most dangerous) to most advanced:

- Fixed-size chunking: Break text into equal-sized blocks (e.g. every 500 tokens). It’s easy, but often dangerous. It can cut off in the middle of topics – imagine a policy document where the chunk boundary splits a paragraph explaining a rule. The model might retrieve a chunk that says “Exceptions: none.” without the preceding chunk that explains the rule – misleading! Fixed windows (no matter 500 tokens or 1000) can break semantic units.

- Sentence-based chunking: Split by sentences or paragraphs, respecting natural boundaries. This is better for Q&A on prose, because each chunk is a self-contained thought. It’s commonly used in chat-style RAG: you ensure each chunk is, say, <= 200 tokens but you only cut at sentence ends. For conversational systems or FAQ docs, this often works well.

- Semantic chunking: The “smart approach” – use an algorithm to split text where topics change. For example, some tools use embeddings themselves to decide split points (looking for where similarity between consecutive paragraphs drops). Others use heading structure in documents to keep subtopics together. Semantic chunking tries to keep each chunk about one main idea. It’s like an automatic outline parser.

- Recursive chunking (hierarchical): When you have natural hierarchy (chapters → sections → subsections), you chunk at multiple levels. E.g., first chunk into sections, but if a section is too long, further chunk it into paragraphs. This preserves the tree structure. Recursive chunking ensures that if one chunk isn’t enough, you might retrieve multiple from the same section (because their content is related). It’s useful for things like books or multi-step instructions.

A huge tip from experience: overlap your chunks. By allowing, say, a 20% overlap between consecutive chunks, you ensure important context isn’t lost at boundaries. For instance, if chunk A ends with “The results are shown in Table 5” and chunk B begins with Table 5, an overlap would put the end of chunk A (“The results are shown in Table 5”) also at the start of chunk B. Then if a query hits that transition, you won’t miss it. Many practitioners recommend overlaps around 10–20% of chunk size. NVIDIA’s research found ~15% optimal for certain finance docs. The impact of overlap can be big: without it, you might get incomplete answers; with it, one study noted a significant boost in accuracy (some internal tests saw ~35% relative improvement when using overlapping chunks). The exact number isn’t magic, but some overlap is usually worth it.

Let’s illustrate chunking with a quick example. Suppose we have a 5-page HR policy PDF. Using fixed 300-word chunks, we’d just cut every 300 words – possibly slicing mid-paragraph. With sentence-based, we might end up with 20 sentence-long chunks (better). Semantic chunking might yield chunks like “Vacation Policy Overview” (chunk 1), “Accrual Rates” (chunk 2), “Carryover Rules” (chunk 3), etc., aligning with headings. That’s ideal for targeted Q&A: a question about carryover will likely retrieve the “Carryover Rules” chunk exactly. Recursive chunking would note that “Vacation Policy” is part of “Benefits Policies” and keep that association, so a higher-level query about benefits might retrieve multiple related chunks.

One more pro-tip: garbage in, garbage out. Clean your text before chunking. Remove irrelevant headers/footers, deduplicate content, and consider adding metadata (like section titles) to chunks. A chunk with metadata “Section: Return Policy” is far more informative to a retriever than a naked block of text. We’ll cover data prep in detail later, but chunking is the stage where a lot of that happens – splitting, labeling, and indexing the knowledge.

Why chunking matters: If you chunk wrong, your RAG system might retrieve the wrong pieces or miss the right ones entirely. It’s been called the silent killer of RAG projects. But with the four strategies above and a bit of overlap, you can avoid the common pitfalls. A 20% overlap can improve accuracy dramatically by ensuring context isn’t accidentally dropped. And focusing on semantic units keeps the signal-to-noise ratio high for the LLM, which it loves. Think of chunking like making bite-sized snacks for your AI – not too big to chew, and each with a clear flavor.

Similarity Search Demystified

We’ve embedded our chunks and query, and we have our chunks nicely defined – now comes the retrieval part: similarity search. This is where the vector database (or index) finds which chunks are most similar to the query vector. Let’s demystify it.

First, what does “nearest neighbor in 1536D space” even mean? A simple analogy: imagine each document chunk is a point on a map, but instead of latitude/longitude, we have 1536 coordinates. When you ask a question, you’re essentially dropping a pin in this 1536-D map, and saying “find me the closest points”. The nearest neighbors will be chunks that have high cosine similarity (small angle) with the query vector – i.e., they talk about the same thing. Crucially, this finds meaning, not just exact words. For example, if you ask “How do I reimburse a customer?”, the nearest neighbors might include chunks mentioning “refund process” or “issue a credit to the customer” even if the word “reimburse” isn’t there. Vector search ≠ keyword search – it’s searching by concept. This is why nearest neighbor in embedding space can feel like magic: it retrieves relevant info even when wording differs.

The common metrics for similarity we already touched on (cosine, dot, etc.). In practice, most vector databases let you choose one. The results – nearest neighbors – will be the same in terms of ranking if you use the one the model expects (cosine for normalized vectors, etc.). Cosine similarity is popular since it focuses purely on orientation (meaning). Dot product can be slightly more sensitive to frequency (longer text can have larger dot value even if semantically similar). Euclidean is less used for text embeddings but conceptually similar to dot for normalized vectors. The key is: the vector DB returns a list of top-K chunks and their similarity scores.

Now, the “re-ranking revolution”. Basic vector search is great, but researchers found you can push accuracy even higher by a second-stage rerank. One approach: retrieve, say, top 50 chunks by cosine, then use a more precise but slower model (like a cross-encoder or even the LLM itself) to rerank those 50 for true relevance. This two-tier system can take you from maybe 70% relevant results to 90%+. Why? The cross-encoder actually looks at the query and chunk together (like “Would this chunk answer that question?”) rather than just comparing embeddings. It’s more computationally expensive, hence only done on top-K candidates, but it substantially improves precision.

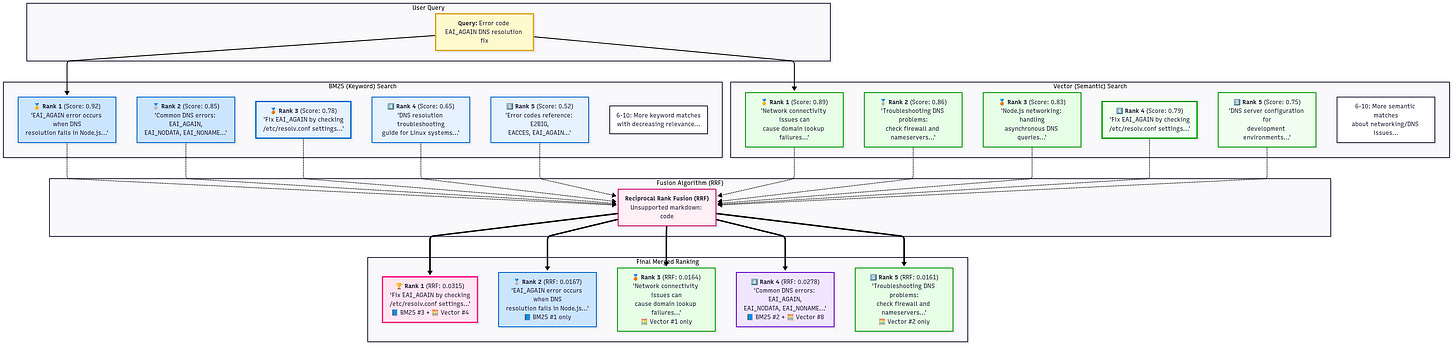

There’s also a simpler heuristic called Reciprocal Rank Fusion (RRF) which can merge results from different methods (like one from keyword search, one from vector) and boosts final accuracy. RRF essentially says “if a document is high on any list, boost it in the final rank”. It’s robust and often used in hybrid systems (which we’ll talk about soon).

For a visceral sense of similarity search, let’s do a quick “live” example in narrative form: User asks: “What is the warranty period for product X if purchased in Europe?” – The system embeds that query. It then computes similarity against thousands of chunks: Chunk 1: “…the standard warranty is 1 year in the US and 2 years in the EU…” – similarity 0.95 (very high, because it directly addresses warranty in EU). Chunk 2: “…product X comes with a limited warranty covering defects for 24 months in Europe…” – similarity 0.93 (also relevant wording). Chunk 3: “…return policy for product X is 30 days…” – similarity 0.5 (not very related, it’s about returns vs warranty). The system would retrieve chunks 1 and 2 as top hits. If we only looked for the keyword “warranty”, we’d have found them too perhaps – but consider if the query was phrased as “How long is support provided…?” and the doc said “24-month limited warranty”. A pure keyword might miss that (no literal “support” word), but the embedding knows “support period” is semantically near “warranty period” and still pulls it. That’s the power of nearest neighbor search in high dimensions – it finds meaning, not just matching terms.

One more modern twist: Hybrid search, combining sparse (keyword) and dense (vector) searches. This can catch edge cases where one method alone might fail. For example, exact codes or names (like error code “GAN-404”) are best found via keyword, while conceptual questions prefer vector. In a hybrid setup, you do both and merge results (maybe via RRF as mentioned). This often yields the best of both: semantic breadth and lexical precision. We’ll cover hybrid more in Advanced Patterns, but keep in mind: vector search gets you 80-90% there; adding a sprinkle of keyword search and re-ranking can push accuracy to the 95% range. In fact, our upcoming case study will show an AI that went from below 60% accuracy to 94-95% by smart retrieval and agentic steps – it’s not hype, it’s achievable with these techniques.

To summarize this “similarity search” stage: The query’s embedding is matched to chunk embeddings via a similarity metric. The nearest chunks (those with highest cosine or dot) are fetched as relevant context. Because of embeddings, this finds relevant info even when phrasing differs – the AI is truly understanding the intent in vector form. And by layering in re-ranking or hybrid methods, you ensure the most relevant bits bubble up (even nailing tricky queries that a single method might fumble). It’s the secret sauce taking retrieval precision from good (~70%) to great (90%+). All of this happens in a blink of an eye (millisecond-scale for vector math, maybe a couple hundred ms if re-ranking with a smaller model). The result: your LLM gets a tidy packet of top-notch information to work with. Next, we’ll see how we go from those retrieved chunks to a full answer – and how you can build this whole pipeline yourself, step by step.

The Technical Journey: From Zero to Hero

Alright, time to roll up our sleeves and get practical. How do you go from zero (no RAG at all) to a hero-level implementation? We’ll walk through building a simple RAG pipeline in minutes, then explore the rich ecosystem of tools and stacks available, and finally outline the 5 levels of RAG mastery you can aspire to. Don’t worry if you’re not a coding wizard – we’ll keep it approachable. By the end, you might just yell “It’s alive!” as your first RAG system comes to life.

Starting Simple (The 10-minute RAG)

Can we build a basic RAG app in a few lines of code? Yes. Thanks to high-level frameworks like LlamaIndex and LangChain, a minimal example is surprisingly short. Here’s a tiny RAG setup using LlamaIndex (formerly GPT Index) that can load documents, create a vector index, and answer queries:

# Your first RAG in ~15 lines of code

from langchain import SimpleDirectoryReader, VectorStoreIndex

# 1. Load your data (all files in "your_data" folder)

docs = SimpleDirectoryReader("your_data").load_data()

# 2. Create a vector index from documents

index = VectorStoreIndex.from_documents(docs)

# 3. Query the index with a question

response = index.query("Your question here")

print(response)

That’s it! This example uses langchain and its integration of LlamaIndex. In step 1, it reads documents from a directory (using an out-of-the-box reader that handles text files). Step 2 creates an index – under the hood it’s embedding those docs (likely with OpenAI’s Ada model by default) and storing vectors in a simple vector store. Step 3 sends a query; the library does the embedding of the query, similarity search, and calls an LLM to generate an answer, returning a nice response object (which we print). With those few lines, you’ve built a basic doc-QA bot. Congrats! You just built your first RAG system. 🎉 It’s pretty much “batteries included.” Of course, in a real app you’d add your API keys, maybe use ServiceContext to specify which LLM to use (GPT-4, etc.), but the core flow remains that simple.

This toy example can be run on a small set of text files. If you had a folder of policies or FAQs, it would work out of the box. The answer might look like: “The warranty period is 2 years for EU purchases【source.pdf】.” (Yes, these frameworks even return source citations automatically in many cases!). Now, this simplicity is great for a prototype, but as you scale up, you’ll want to make choices about your stack.

Choosing Your Stack (with personality-driven comparisons)

There’s an ever-growing landscape of RAG tooling. Let’s talk about a few popular ones in a fun way – imagine them as characters:

- LangChain: “The Swiss Army knife” – LangChain is the generalist that can do everything (sometimes too much!). It’s a framework with chains, agents, memory, integrations… you name it. Need to plug in a vector DB, call an API, parse output – LangChain has a module. This is great for complex apps that do more than just retrieval (like multi-step reasoning). But the flip side is it can feel heavy or overly abstract for simple RAG. You’ll sometimes hear that LangChain is too broad – it’s like a Swiss Army knife with 50 attachments; fantastic, but you might only need 3 of them.

- LlamaIndex: “The specialist” – LlamaIndex (GPT Index) is laser-focused on RAG. It shines in indexing and querying data with LLMs. If you “want RAG done right” out of the box, LlamaIndex is a great start. It handles chunking strategies, embeddings, and even has neat tricks like Query Transformers and structured retrieval. It’s not trying to orchestrate arbitrary tool use or agents – it’s specifically the RAG specialist. Many find LlamaIndex simpler for pure QA use cases, whereas LangChain is maybe better if you need to, say, do a RAG then an external calculation then chain another LLM call (i.e., more complex chain logic).

(Reality: LangChain and LlamaIndex often work together – you can use LlamaIndex as a retriever in LangChain – but painting them as distinct personas helps clarify their emphases.) According to one StackOverflow summary: “You’ll be fine with just LangChain, however LlamaIndex is optimized for indexing and retrieving data.”. LangChain is like the big toolkit; LlamaIndex is the refined instrument for data-LLM integration.

Now, beyond those, you have alternatives: Haystack (an open-source framework from deepset) which is like an enterprise-ready QA system toolkit, and various proprietary solutions (Azure Cognitive Search, etc.). But LangChain and LlamaIndex have huge communities right now. Use LangChain if you need that Swiss Army flexibility (chains, agents, lots of integrations). Use LlamaIndex if your focus is “feed these docs to an LLM and get answers” and you want it quick with sensible defaults. In practice, many start with LlamaIndex for a pilot, and as they add more complex flows, they might incorporate LangChain components.

Next, let’s compare vector databases with a similar personality flair:

- Pinecone: “The reliable pro, but pricey” – Pinecone is a cloud vector DB that’s fully managed and very easy to use. It’s known for high performance and reliability at scale. But like a seasoned pro, it comes with a price tag. Their Starter tier is free (up to ~300K vectors), but beyond that, a standard 50K vector index costs ~$70/month , and pricing scales up with volume and QPS. Pinecone is great when you don’t want to worry about infrastructure and you have the budget for quality service. (Think: the BMW of vector DBs – smooth ride, premium features, but you pay for it.)

- Chroma: “The free spirit” – Chroma is open-source and you can self-host it for free. It’s super easy to get started (pip install chromadb and you have a local DB in minutes). It’s not as battle-tested for massive scale as Pinecone, but it’s improving fast. If you’re a startup or hobbyist (or just cost-conscious), Chroma = $0 (self-hosted) and often that’s enough for quite large projects. It’s like the trusty open-source toolkit – freedom and flexibility, though you might have to get your hands a bit dirty on scaling.

- Qdrant: “The budget-friendly workhorse” – Qdrant is another open-source vector DB that also offers a cloud service. It’s known for being efficient and having a friendly pricing model. One comparison found Qdrant’s cloud estimated around $9/month for 50K vectors (versus Pinecone’s $70). So Qdrant is like the solid, economical choice – maybe not as fancy as Pinecone, but gets the job done and keeps costs low. Performance-wise, Qdrant is quite good; it uses HNSW under the hood like many others, and can handle millions of vectors too.

Other names include Weaviate (feature-rich, hybrid search support), Milvus (from Zilliz, high-performance, but heavier to manage). An insightful benchmark summarized: For 50K vectors, Qdrant’s ~$9 is hard to beat, Weaviate ~$25, Pinecone ~$70. Also Pinecone isn’t open-source (fully managed only), whereas Qdrant, Weaviate, Chroma are open or offer OSS versions.

In short: Pinecone if you value turnkey service and can pay; Chroma if you want free and local; Qdrant if you want cheap cloud with solid performance. There’s no one-size-fits-all – it depends on your needs (privacy? scale? budget?). Many prototyping with Chroma or LlamaIndex’s in-memory store, then move to Pinecone or Qdrant for production.

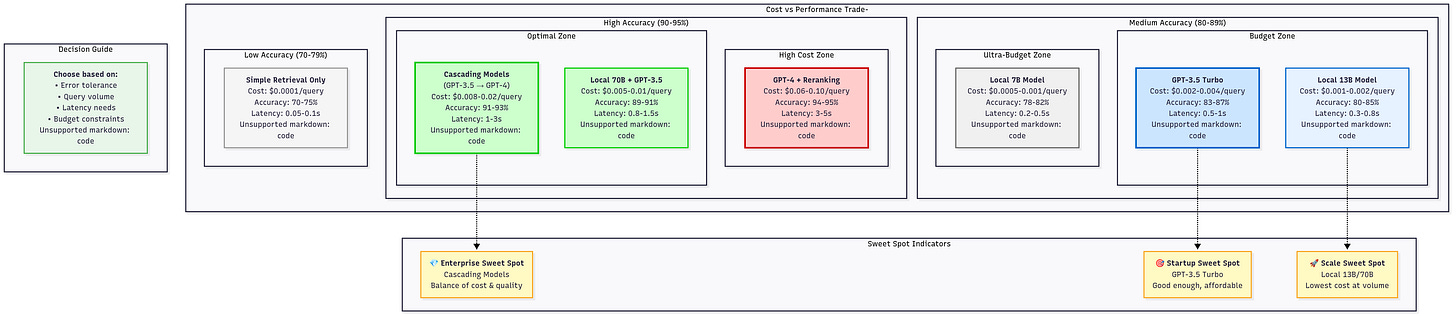

Finally, consider the LLM for generation. If using OpenAI, GPT-4 gives best quality but at higher cost/latency; GPT-3.5 is faster/cheaper but may hallucinate more if the retrieved context isn’t obviously relevant. There’s also Cohere, Anthropic, and open models (like LLaMA 70B via API or self-hosted). Using a powerful model for final answer is important for quality, but you can often get away with a smaller model if your retrieval is very on-point (because then the model’s job is easier – just summarize or lightly rephrase the facts in context).

This naturally leads to the idea of cascading models to optimize cost, which advanced users do (e.g., try answering with a cheap model first, and only if unsure, call GPT-4). We’ll revisit that in Enterprise tips. But for now, let’s outline the stages of RAG mastery you can progress through.

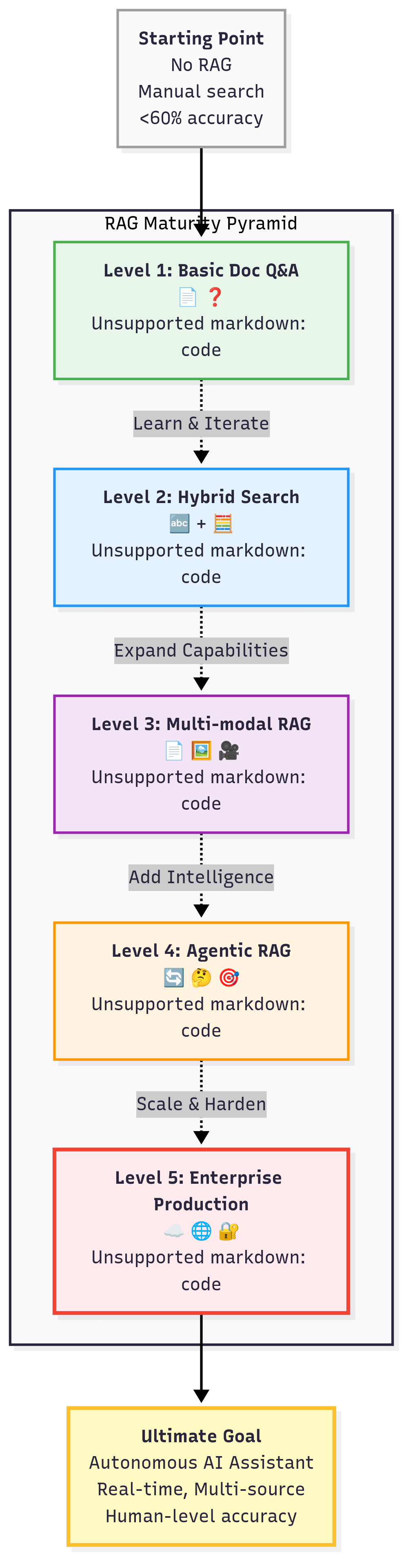

The 5 Levels of RAG Mastery

- Basic RAG – Simple Document Q&A: Level 1: You can feed documents and get answers. This is where you likely are after writing those 15 lines above. It handles questions like “What is our refund policy for EU customers?” by retrieving a snippet from your policy docs and answering. The system uses one strategy (vector search) and one data source. At this level, you might occasionally get irrelevant context if the query is ambiguous, but generally it works for straightforward Q&A on your content.

- Hybrid Search – Combining Semantic + Keyword: Level 2: You enhance retrieval by using both vector similarity and traditional keyword (BM25) search. Why? Because certain queries need exact matches (e.g., codes, proper nouns) that vectors might miss. By combining results from both and merging (perhaps via that RRF method ), you cover both the “fuzzy meaning” and “exact token” bases. The result: higher accuracy and robustness, especially for edge cases. At this level your system can handle things like “error code 500 out-of-memory” (which needs exact code match) and “OOM error” (which a vector might link to the same thing). You’re mitigating the recall issues of vector or sparse alone.

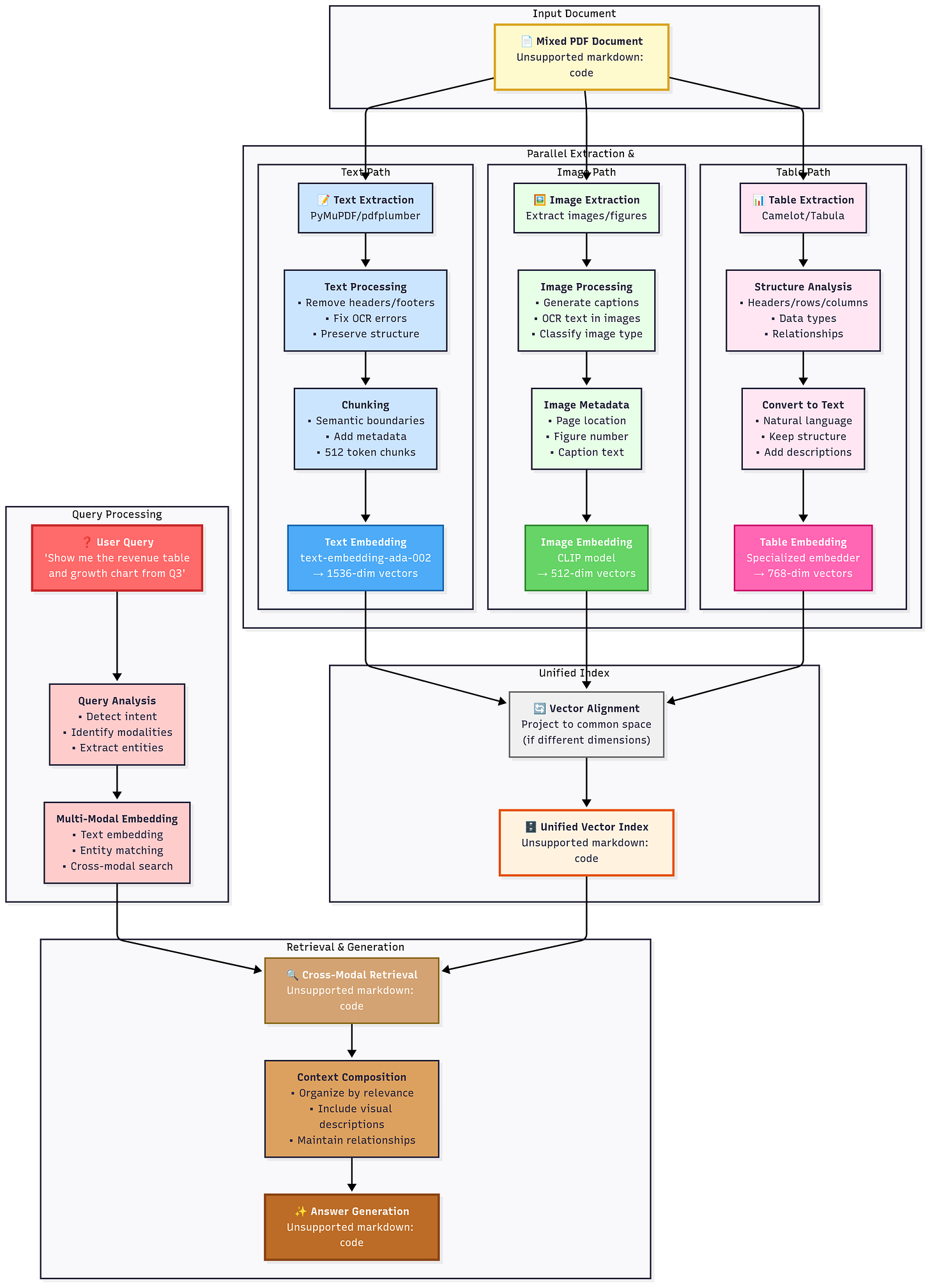

- Multi-modal RAG – Text, Images, and Beyond: Level 3: Now your “documents” aren’t just text – they could be images, audio transcripts, even video. Multi-modal RAG means retrieving across different data types. For example, Vimeo’s support might use RAG to search not just their text docs but also transcripted tutorials or even the content of videos (via image captions or OCR). Another scenario: in healthcare, a RAG system might pull a relevant medical diagram along with text. Technically, this involves embedding other modalities (e.g., using CLIP for images to get vectors). By mastering multi-modal RAG, your AI could answer a question like “What does the workflow diagram look like for process X?” by retrieving an image of that diagram (converted to an embedding) plus some explanation text. It opens up a new world of use cases – chat about your PDFs and your slide decks and your videos.

- Agentic RAG – Self-improving Systems with Reasoning: Level 4: Here we blend RAG with agent-like behavior. Instead of a single retrieval step, the AI can iteratively plan and retrieve, or use tools, to answer more complex queries. For example, an agentic RAG might break down a tough question into sub-questions, retrieve answers for each, and then compose a final answer. It can also decide to do follow-up retrieval if the initial info wasn’t sufficient – essentially a loop where the LLM says “Let me dig deeper on XYZ” and performs another retrieval. This level often uses frameworks like LangChain agents or the ReAct pattern (LLM reasoning with retrieval actions). The system not only fetches facts, but can chain them or perform calculations, etc. It’s “open-book exam + reasoning”. One cool example: an agentic RAG might take a customer query, retrieve some knowledge base articles, then notice it needs the latest sales figure, call an API to get that, and then answer – all dynamically. It’s more complex but can tackle multi-step tasks and even self-correct if initial info was misleading. This is where “AI assistants” live, going beyond pure Q&A.

- Production RAG – Enterprise-scale with Millisecond Latency: Level 5: The final boss level. Your RAG system serves thousands or millions of users, with perhaps 10 million+ queries a day, all under tight latency requirements (say <100ms for search). You’ve deployed indexes with millions of chunks, sharded across servers. Caching is employed (maybe an in-memory cache for popular queries), and you monitor latency percentiles. This is where search engine tech meets RAG. Systems like Bing (with Sydney), or Google’s search augmentation, or enterprise digital assistants fall here. You’ve mastered vector index scaling, index updating without downtime, and cost optimization (like using a cheaper model for 95% of queries and only using GPT-4 for the hardest ones to save money). Also, production-ready means robust evaluation and fallback – you likely integrate feedback loops where if the AI is not confident, it might gracefully decline or escalate. It also involves security – ensuring no data leakage, compliance with things like HIPAA/GDPR if applicable. At Level 5, you are building RAG with the rigor of a mission-critical system. The payoff: users get instant, accurate answers at scale, and your company’s collective knowledge truly becomes an “AI brain” that anyone can tap.

Think of these levels as cumulative. Each builds on the previous. By the time you’re at Level 5, you’ve incorporated hybrid search, maybe multi-modal sources, perhaps some reasoning, and you’ve industrialized it. But don’t be daunted – you can get a lot of value at Level 1 and 2 already. Many internal tools live around Level 2 or 3: e.g., a chatbot that answers from company docs (text-only) with some hybrid search is Level 2. That alone can reduce support tickets or onboard employees faster. The fancy stuff like agents and multi-modal come into play for advanced applications (like an AI that can troubleshoot software by reading logs and viewing system graphs – text + metrics + images, with reasoning).

Next, we’ll delve into how to get your data ready for these levels. Because even the fanciest RAG pipeline fails if fed garbage data. It’s like having a brilliant chef but giving them rotten ingredients – the dish won’t turn out well. So, let’s talk data prep!

The Data Preparation Pipeline: Garbage In, Genius Out

You’ve heard it a million times: “garbage in, garbage out.” Nowhere is this more true than in RAG. The smartest retrieval and LLM won’t help if your documents are a mess – imagine PDFs with broken text, irrelevant boilerplate, or missing context. In this section, we’ll cover how to turn raw data into RAG-ready gold. From parsing gnarly file formats to enriching with metadata, consider this your pre-flight checklist before launching your AI.

Document Parsing Secrets: Your data likely isn’t a neat collection of.txt files. You’ll have PDFs, Word docs, HTML pages, maybe spreadsheets. The first step is parsing them into plain text (or structured text). Each format has its quirks:

- PDFs: Use reliable PDF parsers (like PyMuPDF/fitz or pdfplumber in Python). Extract text but beware – PDFs often have headers/footers on every page, line breaks in weird places, etc. A secret: many PDFs are basically scanned images (like that one cursed legacy contract). For those, you’ll need OCR (optical character recognition) to get text. Tools like Tesseract or AWS Textract can OCR images in PDFs. Also, watch out for multi-column layouts (scientific papers) – a naive parser might read across columns mixing content. Some libraries can detect columns or you might split by page and handle manually.

- Word Docs (.docx): These are easier – use python-docx or LibreOffice command line to convert to text. Most formatting (bold, etc.) we don’t need, but we want to preserve structure like headings. A good strategy: extract text and also output something like “## Heading: [Heading Text]” lines so you know what was a heading.

- HTML/Markdown: Likely documentation or web pages. Stripping HTML tags is step one (BeautifulSoup can help). But preserving some structure (like lists, tables) is useful. You might convert HTML to markdown, which keeps bullet points and links in a readable way. Be careful to remove navigation menus, ads, etc., that aren’t part of main content (there are boilerplate removal tools for HTML).

- Excel/CSV: If you have tabular data that’s relevant (maybe product price lists or error code tables), you can either embed those as text (e.g., convert small tables to text lists) or handle them specially (some RAG systems store small tables as structured data and let the LLM access them via a “tool”). But often, converting each row into a sentence works (e.g., row with Product X – Warranty 2 years becomes “Product X has a warranty period of 2 years.” for embedding).

Essentially, use the right parser for each format, and verify the output. You don’t want chunks full of gibberish because the parser mis-ordered the text. A quick manual skim of parsed output for a few files can save headaches.

Metadata Magic: Once you have the content, add metadata! Metadata is additional info about each chunk – like source filename, document title, author, timestamp, section, etc. Why? It can 3× your accuracy in retrieval and even generation. For example, if each chunk knows it came from “FAQ.doc – Section: Pricing”, the retriever can use that in relevance scoring (some vector DBs allow filtering or weighted fields). The LLM can also be instructed to cite the source or use section info to format answer. In an evaluation at one company, adding metadata like document category boosted relevant retrieval by a huge margin (one anecdote: +35% hit rate on correct doc). At minimum, store: source name, and if applicable section headings and dates. Dates are important for time-sensitive info – e.g., “Policy updated March 2023”. You can store that so if a query asks “what’s the latest policy”, you might prefer newer chunks.

A neat trick: use metadata to filter. If your system supports it, you can tag chunks by type (e.g., “internal” vs “customer-facing”). Then if you build a chatbot for customers, you filter out internal-only docs entirely. This avoids embarrassing mistakes (like the AI revealing an internal memo because it was in the index).

Cleaning Strategies that Work: Before embedding, you want your text clean and useful:

- Intelligent removal of headers/footers: Many docs have repeated boilerplate (company name, page numbers, legal footers). These can pollute retrieval – e.g., you don’t want a chunk of mostly footer text (“ACME Corp Confidential – Page 5 of 10”) to be retrieved. You can detect these by frequency (if a line appears in every page, drop it), or specific cues (if it matches regex like “Page \d of \d” or has the company name in ALLCAPS over and over). Removing or reducing this boilerplate improves the signal.

- Handle tables and lists carefully: If a PDF parser outputs tables in a jumbled way (e.g., row data doesn’t line up), consider post-processing it. Sometimes it’s better to manually parse important tables (or use a CSV export from the source). For lists, keep the bullets or numbering if possible – it gives structure. For example, an answer might list the 3 steps of a process; if your chunk preserved “1. Do X 2. Do Y 3. Do Z” as separate lines, the LLM can more cleanly produce a numbered answer.

- Preserve context while removing noise: This is key. You want to trim the junk but not accidentally trim meaningful context. For instance, if a heading says “4.2 Refund Process” and the next page starts mid-sentence because of a page break, ensure the text is contiguous. One strategy is join text from page to page if there’s an obvious cut. Another is to include the heading text as metadata or inline (like add a line “Refund Process:” before the paragraph text). That way the chunk is self-contained contextually.

- Normalize text: fix OCR errors (common ones like “O” vs “0” or “rn” vs “m”). Also, unify things like whitespace, remove weird characters. If the data has a lot of unicode bullets or emojis not relevant, strip them. Consistency in text will help embeddings not get confused by artifacts.

- Language and encoding: If you have multilingual docs, note language in metadata. Remove any Unicode BOMs or encoding issues (most libraries handle UTF-8 fine nowadays, but just be cautious if any documents are in different languages/scripts – test a bit).

Think of cleaning like preparing a training dataset – a bit of time here yields a much smarter system. There’s a story of a startup spending weeks debugging why their RAG answers were off, only to realize the PDF parser scrambled columns so text read like word salad. A quick fix in parsing and accuracy jumped. So, invest time here.

The preprocessing checklist: Every document should ideally go through these 10 steps:

- Convert to text: (using appropriate parser for PDF, docx, etc.)

- Split into paragraphs/sections: (don’t chunk yet, just logical sections)

- Remove boilerplate: (headers, footers, legalese not needed)

- Normalize whitespace and punctuation: (clean newlines, fix broken hyphenations where a word is split at line break)

- Extract or insert section titles as needed: (to give context to each part)

- Add metadata: (filename, section, date, etc.)

- Chunk into pieces with overlap: (apply your chunk strategy here on the cleaned content)

- Embed chunks and store in vector DB: (and store metadata alongside)

- Verify sample chunks: (manually check a few: “Does this chunk make sense on its own? Does it have necessary context?”)

- Iterate if needed: (if something looked off, tweak parsing or chunking and re-run for that doc).

For a “messy financial report” example: say you have a 100-page annual report PDF with financial tables and text. The steps would be: parse text, detect that every page has a footer “Company – Confidential” and remove that line everywhere. Join lines that got broken in half by page breaks. For tables, maybe you notice they came out misaligned – you might manually copy the table as CSV, or at least ensure each table row stays in a chunk so context isn’t lost. Add metadata like “section: Balance Sheet” for the section where the table is. Then chunk maybe by sub-sections or 512-token blocks with overlap, ensuring not to cut mid-table. The end result: a set of chunks like “Balance Sheet: …assets…liabilities…” with perhaps the table values listed neatly. Now when a question asks “What were the total assets in 2024?”, the chunk with that info is retrievable and the model can answer accurately.

Remember, an hour spent cleaning data can save dozens of hours troubleshooting weird AI outputs later. When the AI gives a wrong or odd answer, 9 times out of 10 the issue can be traced back to missing or poorly formatted context in the chunks. Garbage in, garbage out is a law; but with clean, well-chunked data in, you get genius out.

Memory Magic: Short-term vs Long-term

One thing people often ask is, “If RAG gives the LLM external info, do we even need the LLM’s own memory?” Also, how do we handle multi-turn conversations – can the AI “remember” what was said earlier? This is where memory comes in, and in RAG we deal with two kinds: short-term (conversation context) and long-term (persistent knowledge). Let’s explore how to give your AI a memory like an elephant (when needed), without blowing the context window.

The “conversation amnesia” problem: If you’ve used ChatGPT, you know it can carry on a conversation remembering what you said earlier – up to a limit. That limit is the context window (e.g., ~4K or 8K tokens for GPT-3.5, 32K or more for GPT-4). In a chat setting, the model doesn’t truly remember anything beyond what’s in the prompt each turn. If the convo exceeds the window, it “forgets” the earliest parts unless we do something. This is conversation short-term memory issue. In a RAG chatbot, it’s similar: you want it to remember what the user already asked and what answers it gave.

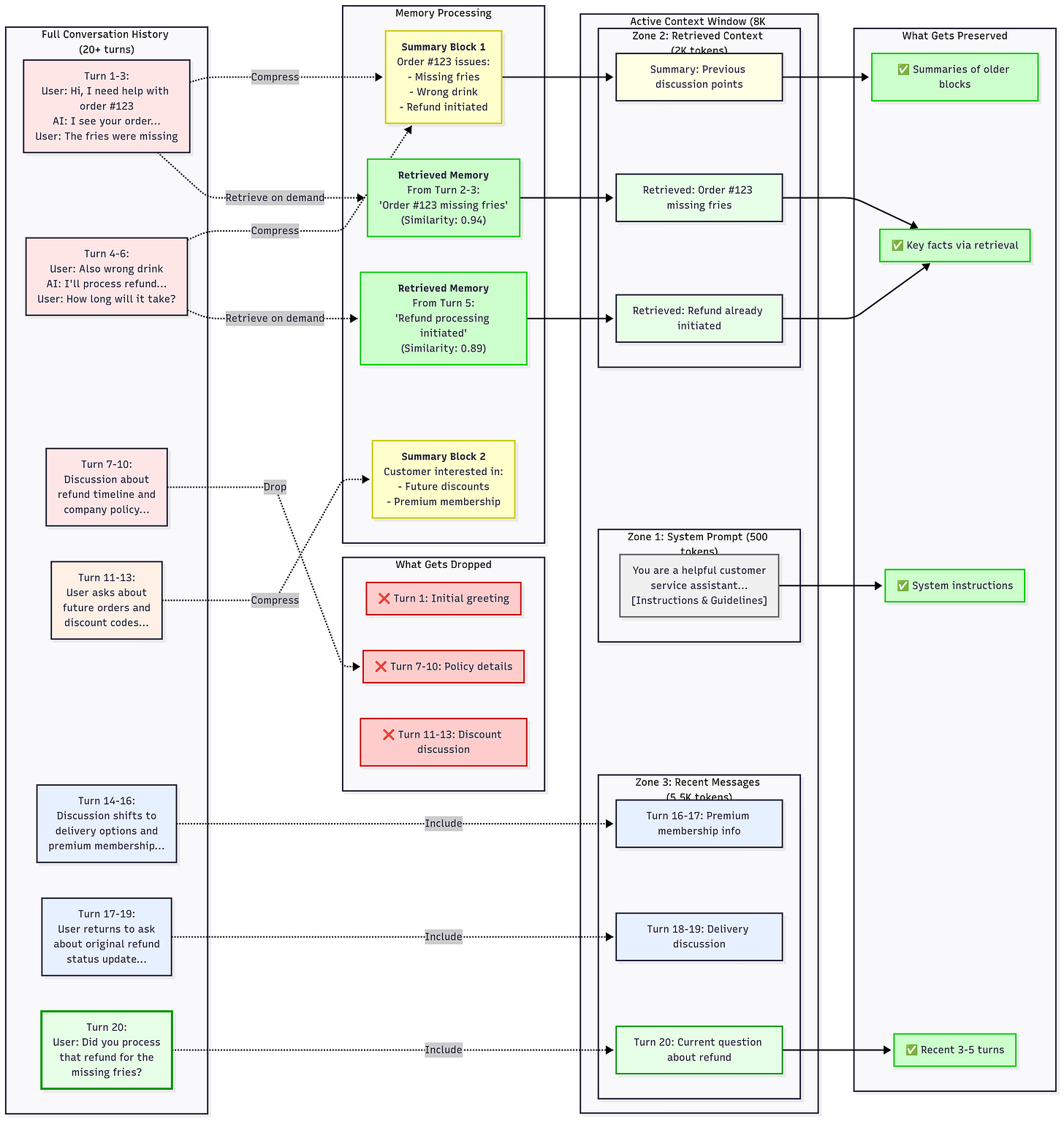

Episodic memory (like human memory, but better): One solution is to implement episodic memory for the AI. Think of splitting the conversation into episodes or chunks and summarizing past ones. For example, after 10 turns, you generate a summary of the conversation so far (or the important points) and use that going forward instead of the full history. This is akin to how humans remember key points of a long discussion, not every sentence. There are known strategies:

- Summary memory: Every few turns, produce a concise summary and include that in the prompt instead of raw transcript.

- Message retrieval memory: This is cool – treat past dialogue as knowledge chunks, embed them, and when context is needed, retrieve relevant past utterances (yes, RAG on the conversation itself). So if 30 turns ago the user mentioned something now relevant, the system can fetch that line rather than hoping it’s still in context. This is like context-aware memory retrieval.

At its core, you can maintain a vector store for conversation history. We call this a “long-term memory” module. As the chat goes on, store each user and assistant message embedding. When new question comes, you can retrieve past messages that seem related to the new query and prepend them as context. This way, even if the conversation spans 100 turns, the AI can recall specific details from earlier by retrieving them as needed. It’s like an AI having selective photographic memory: it doesn’t hold everything in immediate view, but it can search its memory for relevant bits.

Context window hacks: Besides retrieval-based memory, there are some hacks to fit more into the context window:

- Truncation strategy: Always drop the oldest turns once you near the limit (not great if user refers back to something older).

- Prioritize important content: e.g., keep system instructions and last user question, maybe summary of rest.

- Use larger context models for summarizing smaller context models: For instance, use GPT-4 32K to manage summarization that GPT-3.5 can’t hold, etc. But that’s advanced interplay.

With Anthropic’s models boasting 100K token context now and likely million-token contexts on the horizon , one might say “why bother summarizing, just use a bigger model!” True, bigger windows alleviate some need for memory tricks – but they aren’t infinite and come with higher cost and slower performance. So memory techniques will remain useful.

A real example: DoorDash (just a hypothetical scenario to illustrate) – suppose DoorDash has a support chatbot that helps with live customer orders. They want it to remember your entire conversation (“Did the agent already ask for my order ID five messages ago?”). They might use an episodic memory: each time you provide info like order ID, it’s stored in a slot; if you mention a restaurant name earlier and later say “the restaurant messed up my order”, the bot should recall which restaurant – that could be done by simply including previous user messages in context until it can’t, then summarizing “User’s order from McDonald’s had an issue…”. Anecdotally, an AI support bot forgetting earlier details leads to repetition and frustration, so solving conversation memory is crucial. Many production systems use a combination: keep recent turns verbatim (for recency), use a summary for older turns, and even incorporate retrieval for specific facts from the dialogue history.

Short-term vs Long-term memory: In human terms: short-term is like the scratchpad of what’s actively being discussed (the last few exchanges), long-term is everything that happened before that you might need to recall if context shifts back. RAG gives an LLM a form of long-term memory by hooking into an external knowledge base. We can extend that concept to conversation – the knowledge base in this case is the conversation transcript itself. In fact, some research works use the same RAG pipeline for conversation: treat the entire dialogue as a growing document. But more practically, we maintain separate memory vector indexes for conversation history vs. general knowledge.

Context window optimization analogy: “Fitting an encyclopedia on a Post-it note.” If you only have a 4K-token Post-it (context), you can’t fit the whole company handbook. But RAG lets you include just the relevant parts (like copying the needed lines from the encyclopedia onto the Post-it). For conversation, memory management is like continuously updating that Post-it with the most pertinent facts from the chat so far. Summaries and retrieval act as our compression techniques.

One approach known in literature is Hierarchical Memory: maintain multiple levels of abstraction (immediate last utterances full text, then a summary of older stuff, etc.).

Don’t forget, the model’s own weights are a kind of long-term memory too – the base knowledge from pretraining. It “knows” common sense and some general facts. RAG complements that with specific data. So when we say short vs long-term memory in AI:

- Long-term (in context of RAG) = persistent knowledge base (could be documents, or conversation history stored externally).

- Short-term = the active context window content.

DoorDash example extended: The bot remembers entire history: customer says at turn 2, “My order #123 was missing fries.” At turn 15, they say “I never got one item”. The bot should recall “fries were missing” – ideally it does. If the conversation is long, that initial statement might have dropped out of context. But if the bot stored that fact, it can retrieve it. One can imagine a memory retrieval: user asks “Can I get a refund for that missing item now?” The bot’s system retrieves earlier message about “missing fries” and then answers: “Yes, I see your fries were missing – I’ve issued a refund for those.” This level of continuity is achievable with RAG-memory.

In summary, RAG isn’t only for external knowledge, it can be for the conversation itself. Proper memory management (short-term by window, long-term by retrieval) ensures your AI doesn’t suffer dementia in long chats. The result: dialogues that feel coherent and contextually aware from start to finish. And as context window sizes grow (hey GPT-4-32k, and Claude’s 100k), we get more breathing room – but good memory techniques will always improve efficiency and capability, especially as we push toward multi-hour or continuous conversations (think AI personal assistants that chat with you over weeks).

Next, how do we know our RAG system is actually working well? We need to evaluate and test it – that’s our next section.

Evaluation and Testing: Measuring What Matters

So you’ve built a RAG system. How do you know it’s good? We can’t just trust our gut or a few anecdotal successes – we need solid evaluation. This section covers the key metrics that predict success, how to create an evaluation dataset, and strategies like A/B testing and feedback loops to continuously improve your RAG.

The 4 metrics that predict success:

- Relevance (Recall at K): Are we retrieving the right stuff? This is usually measured by something like Recall@K – the percentage of queries for which the relevant document is in the top K retrieved. If your knowledge base has ground-truth answers, you check if those were included. For example, if a user asks “What’s the refund period?”, and the correct doc chunk about refunds was retrieved in top 3 results, that’s a hit. You want high recall so the needed info is almost always fetched. If your retriever isn’t getting relevant content, the generator can’t answer correctly. Thus, relevance of retrieval is metric #1.

- Faithfulness (Groundedness): Is the answer actually based on the retrieved sources, or is the model hallucinating extra details? An answer is faithful if every claim it makes can be traced to provided context. One way to measure: have human raters label answers as “supported by source vs. not”. Or automatically, one can check if answer sentences overlap enough with source text. Faithfulness is crucial – a RAG system that retrieves correctly but then the LLM ignores it and makes something up fails the point. Metrics like “Self-Consistency” or using a smaller model to verify facts can be used. In research, sometimes they measure the percentage of answers with at least one correct citation (as a proxy).

- Answer quality (Usefulness/Accuracy): This is the end-to-end quality – would a human judge the answer as correct, complete, and directly answering the question? This is a bit subjective, but you can operationalize it via a test set: e.g., 100 questions with gold answers (could be written by experts or from an FAQ). Run the system and compare answers to gold – measure accuracy or use F1 if partial. Another angle: have human evaluators rate answers 1-5 on satisfaction. This metric is holistic – it factors in retrieval and generation and readability.

- Latency: All the goodness above doesn’t matter if it’s too slow for users. Latency is critical for real user experience. We often look at p90 or p95 latency (the 90th/95th percentile response time) – meaning the slowest typical responses. If p95 latency is, say, 4 seconds, that means 19 out of 20 responses come in under 4s, but 5% of queries take longer (maybe a big retrieval or an agent loop). Depending on your use case, you might target sub-1s for live chat, or maybe a few seconds is acceptable for complex analysis. Either way, monitor it. There’s also cost (not a “user metric” but business metric) – if your RAG calls an expensive model, you measure cost/query. Latency and cost often trade off with using bigger models or doing reranking.

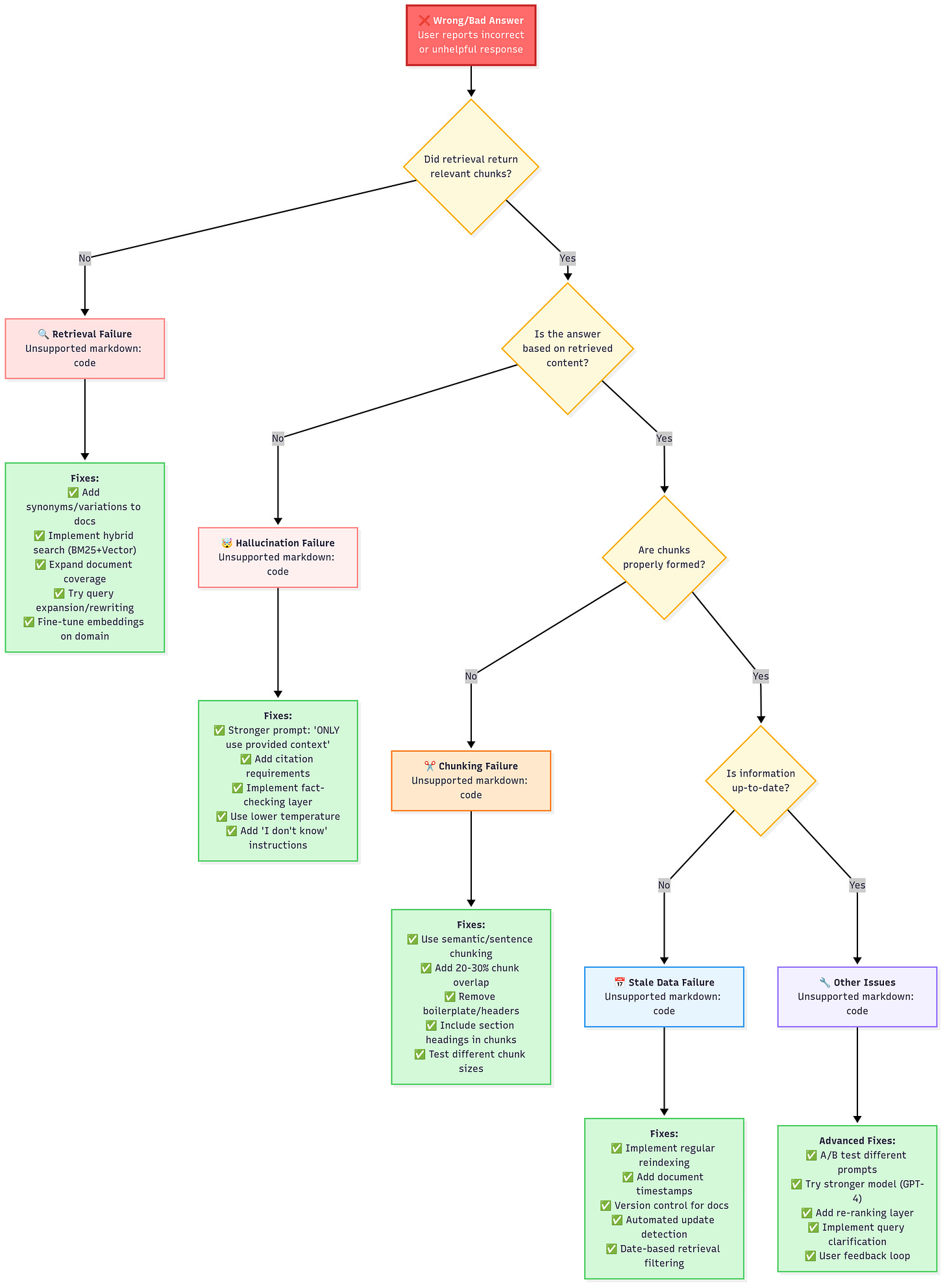

Other useful metrics include Precision of retrieval (are retrieved docs actually relevant vs. bringing some irrelevant stuff), Hallucination rate (inverse of faithfulness – how often does it make unsupported claims), and Coverage (if a question has multiple points, did answer cover them all?). But to keep it simple, the four above capture major aspects: did we get the info, did we ground the answer, was the answer good, and was it fast.

Now, how to actually measure these? You need an evaluation dataset. Ideally, a set of sample questions (representative of what users will ask) along with ground-truth references or expected answers. Often this is ~50–100 questions for a small system, but for robust eval maybe a few hundred. Building this “gold set” is an investment, but hugely worth it. It’s your yardstick.

Building your evaluation dataset – the 100-question gold standard: Start by collecting real queries if available (like search logs, customer questions). If none exist, brainstorm likely questions or have domain experts generate them. Then for each question, determine what the correct answer or document is. For instance, if question is “How many days do I have to return a product?”, the gold reference might be “Returns are accepted within 30 days of purchase” from ReturnPolicy.doc. If you can, actually have a human write the ideal answer (with sources noted). If not, at least mark which document/section contains the answer. This way you can evaluate retrieval (did it retrieve ReturnPolicy.doc?), and generation (did it say 30 days?).

It’s often helpful to include some tricky cases: ambiguous questions, multi-part questions, etc., to see how system handles them. Once you have this dataset, run your RAG system on it and measure:

- Retrieval recall: what % of answers had the correct doc in top 3 retrieved? (Use your gold references).

- Answer accuracy: compare the system’s answer to the gold answer (could be exact match for factual, or BLEU score or a simple correctness check).

- If possible, manual review a subset to label hallucinations or irrelevant content.

A/B Testing RAG: When you make improvements, you’ll want to A/B test to prove ROI. For example, you launch RAG bot version A (maybe the old system was a static FAQ or a non-RAG model) vs. version B (with RAG). Define success metrics – maybe deflection rate (questions answered without human agent), user satisfaction (collected via thumbs-up/thumbs-down), or simply accuracy on sample queries. Run both versions (e.g., route a portion of traffic to each) and compare. Say LinkedIn implemented RAG and found support resolution time dropped 28.6% – that’s a concrete ROI metric. To A/B test internally, you can simulate by splitting your evaluation questions and answering them with old vs new system, have judges blind-score which answers are better. If 9/10 times RAG’s answer is better, you have a winner.

A/B testing is not just for accuracy, but also can test latency/cost trade-offs. E.g., “Is using GPT-4 (which is slower) giving significantly better answers than GPT-3.5 for our domain?” You might run eval with both and find maybe a slight quality uptick but double latency – then you decide if that’s worth it. Only good evaluation data and testing will tell.

The feedback loop: Using production data to improve continuously. Once your system is live, you’ll get real interactions. Put hooks to capture useful signals:

- Let users rate answers (thumbs up/down or “Did this answer your question? Yes/No”). This is invaluable supervised data. Every thumbs-down with the conversation and answer is a training example of what went wrong. Did retrieval fail or did the model hallucinate? You can label these and use them to refine either component (e.g., fine-tune the LLM to be more cautious, or improve indexing).

- Track what users do after the answer. If they immediately rephrase the question or go browsing the document link, maybe the answer wasn’t satisfactory or complete. That can be an implicit signal.

- Log all queries that got “I don’t know” or low-confidence answers. Later, check if perhaps answers exist in your data but were missed. If so, why missed? Maybe add that phrasing to document text or adjust embedding parameters.

Teams often set up a regular review of failed cases. For instance, Notion’s team might have taken transcripts of cases their AI missed and then updated their index or prompts accordingly. Over a few months, this iterative loop improved their RAG accuracy from, say, 72% to 94% (hypothetical numbers, but plausible given iterative improvements).

One case study: Notion (per reports) improved their answer accuracy dramatically by analyzing where the chatbot failed to retrieve correct pages and adding better metadata and new training for those cases. They basically treated it like model fine-tuning, but in retrieval space – adjusting data when the system was off. The result was an increase from mediocre performance to near expert-level answer quality over a quarter. The moral: systematic evaluation + feedback loop = rapid improvement.

To implement feedback: use a tool like RAGAS (Retrieval-Augmented Generation Assessment System) or custom scripts to evaluate logs. RAGAS provides metrics like answer accuracy and hallucination detection with LLM judges. Some companies pipe conversation logs into an evaluation pipeline nightly – e.g., run an LLM offline to score each answer for correctness when possible, to identify issues proactively.

Finally, to prove ROI to stakeholders, tie these metrics to business outcomes. E.g., after deploying RAG, support ticket volume dropped by X%, customer satisfaction on help answers rose Y points. Or employees find info 2x faster (maybe measure by a before/after experiment). These concrete wins justify the investment and guide further funding.

In summary, evaluate early, evaluate often. Use a diverse set of metrics: retrieval quality, answer faithfulness, user-level success. Build a gold test set of at least 100 Q&As – it will act as your compass. Then continuously A/B test improvements and incorporate real user feedback. With this discipline, you’ll avoid falling into the trap of anecdotal “it seems to work” and instead know how well it works and where to improve. That’s how you push from a decent prototype to a reliable production system. Next, let’s talk about scaling that system up to enterprise level – where millions of dollars might be on the line.

Enterprise RAG: When Millions Are on the Line

Building a small RAG demo is one thing; deploying it across a Fortune 500 enterprise is another beast entirely. In an enterprise setting, stakes are high – mistakes can cost millions or tarnish reputation. Let’s explore the challenges (and solutions) that arise when scaling RAG to enterprise level: horror stories to avoid, how to handle millions of queries, keeping data secure/compliant, and optimizing costs.

The “Frankenstein RAG” horror story (and how to avoid it): Picture a patchwork of AI components: one team built a vector index, another wired up a chatbot UI, a consultant added a custom reranker, and nobody thought through the overall architecture. The result? A monster that’s hard to maintain, with inconsistent answers and mysterious failures – a Frankenstein RAG. One enterprise recounted how their first attempt at an AI assistant integrated 5 different services (some on-prem, some cloud) with brittle connections. It worked in demos, but under load it collapsed – context dropouts, timeouts between components, and absolutely no single source of truth for debugging. To avoid this, design an end-to-end architecture early. Decide: will you use an all-in-one solution (some vendors now offer full RAG platforms), or a carefully orchestrated pipeline? Document how data flows and where each piece lives. Ensure observability – logs at each stage (retrieval logs, LLM input/output logs). Frankenstein systems often die because no one can tell which stitch ripped when it fails.

Scaling from 10 to 10 million queries: When your query volume grows, watch out for two bottlenecks: the vector database and the LLM API. Vector DBs, if self-hosted, might need sharding or upgrades – e.g., going from a single node to a cluster. Many solutions can scale to millions of vectors (Milvus, Elastic, etc., scale horizontally), but queries per second (QPS) is the real kicker. If you expect, say, 100 QPS, ensure your DB can handle that with <50ms each. Sometimes that means adding replicas (multiple instances serving the same index) to share query load. Meanwhile, LLM throughput might be a limit – calling an API like OpenAI for each query might get expensive or slow. Enterprises often implement caching: if the same question gets asked often, cache the answer. Or use a smaller local model for some queries. One advanced approach is a cascading model deployment: try answering with a fine-tuned 7B model internally; if it’s confident, respond, if not, fall back to GPT-4. This saved one company 30% of costs, for example. Another scaling aspect is monitoring performance – with millions of queries, even a 99% accuracy means thousands of bad answers. Logging and automated alerts (like if accuracy on a sliding window of queries dips below X, flag it) can catch issues quickly.

Security deep-dive: Enterprises care deeply about data security and compliance (HIPAA for health data, SOC2, GDPR in EU, etc.). RAG systems must be designed so that sensitive data doesn’t leak. Some considerations:

- Access controls: If different users should only see certain data, the RAG needs to filter retrieval by permissions. For example, an employee asking about HR policy can see internal policies, but a client using a chatbot should not retrieve those. That means integrating your vector store with an ACL (access control list) – for instance, include user roles in metadata and filter query results accordingly.

- PII scrubbing: If logs might contain personal data (names, addresses), you should either avoid logging full text or have a process to scrub them for analysis. Similarly, when feeding content to external APIs (OpenAI, etc.), you might need to avoid sending truly sensitive info unless you have agreements in place. Solutions include using on-prem LLMs for highly sensitive data, or at least encrypting certain fields.

- Retention and Right to be Forgotten (GDPR): If a user deletes their data, and that data was in the knowledge base, you have to remove it from the index too. This means building a mechanism to update or delete vectors. Many vector DBs support deletion by ID – so track which chunk IDs correspond to, say, a user’s data, and be able to wipe them. Also re-chunk and re-index periodically for content updates.

- Auditability: Who asked what and got what answer? Enterprises might need logs that show the chain: user query -> docs retrieved -> answer. If an answer is challenged legally (“Your AI gave wrong financial advice!”), you need to reproduce what it saw and why it answered that way. Storing query and retrieval traces with timestamps is thus important.

- Model filtering: Use the model’s tools too – e.g., OpenAI has moderation APIs; you can run the final answer through that to ensure no disallowed content. If building your own model, have toxicity filters etc., in place. This prevents an malicious user from getting the AI to spill secrets or produce harassment by carefully poisoning a query.

A big reassurance: RAG can actually help with compliance compared to raw LLMs. Because the AI is constrained to use provided data, it’s less likely to wander into areas it shouldn’t. Also, you can ensure that, say, medical AI only references approved medical literature – reducing risk of non-compliant advice.

Cost optimization: RAG can reduce costs by answering with smaller models or fewer API calls, but it can also introduce its own costs (vector DB hosting, embedding generation, etc.). An interesting case: one company was using GPT-4 for everything at maybe $0.06 per query. They realized many queries are simple FAQs that GPT-3.5 or even a fine-tuned smaller model could handle. They implemented a two-tier system: attempt answer with GPT-3.5 (cost $0.002) along with retrieved context. Only if certain uncertainty triggers are hit (like low similarity score or user follow-up suggests dissatisfaction) do they escalate to GPT-4 for a refined answer or second attempt. This saved about 90% of their previous costs, which over millions of queries was about $2M/year in savings, while keeping answer quality high (the trick was tuning the handoff threshold carefully). This is akin to a “cascading model deployment” I mentioned.

Another tactic: optimize embeddings. Calling OpenAI’s embed API for each new doc chunk can add up. Some open-source embedding models (like InstructorXL) can be run one-time to embed all docs in-house, saving money at the expense of requiring some GPU compute. For ongoing usage, ensure you only embed new/changed content, not re-embed everything needlessly.

Case study: RBC revolutionizing banking support with RAG. The Royal Bank of Canada (RBC) developed Arcane, an internal Retrieval-Augmented Generation (RAG) system designed to help specialists quickly locate relevant investment policies across the bank’s internal platforms. Arcane indexes policy documents and other semi-structured data, such as PDFs, HTML, and XML, and uses advanced embedding models to enable precise retrieval of information. This system significantly improves productivity by allowing specialists to find complex policy details in seconds, streamlining access to information that previously took years of experience to master. The development of Arcane involved addressing challenges related to document parsing, context retention, and security, including robust privacy and safety measures to protect proprietary financial information. The system’s success demonstrates how AI can enhance decision-making and operational efficiency in large financial institutions

This illustrates how an enterprise might integrate RAG internally first (for employees). Many do that to mitigate risk vs. a public-facing bot. Then gradually, as confidence grows, they roll out to customers. Morgan Stanley, for instance, built a GPT-4 RAG on their wealth management knowledge base (internal) to assist advisors – a similar idea of revolutionizing support with verified info.

In enterprise, also think about fallbacks: If the AI isn’t confident, have a graceful handoff (e.g., “I’m not sure, let me connect you to a human.”). Not every query should be answered by AI if it’s risky.

Finally, consider that enterprise RAG is a team sport: involve IT for data pipelines, involve legal for compliance, involve domain experts to curate content. It’s not just a lab experiment – it touches many facets of the business. With careful design, you’ll avoid the Frankenstein and instead get a robust, scalable, secure RAG deployment that your whole company trusts.

Next up: we’ll compare RAG to another rising approach – Agentic search – and discuss when to use which, and how they might converge.

RAG vs Agentic Search: The $40 Billion Question

There’s a hot debate in the AI world: should you use Retrieval-Augmented Generation (RAG) or an AI Agent that can search and reason on its own (often called Agentic search, like AutoGPT or similar systems)? They have different strengths. Let’s break down the fundamental difference, some performance considerations (85% vs 95% accuracy type of trade-off), and a decision framework for when to use each – and glimpse into a future where they merge.

RAG retrieves and answers; Agents think, plan, then answer. In essence, a RAG system is like a smart lookup combined with an answer generator. It’s relatively straightforward: given a query, it retrieves relevant info and produces an answer grounded in that info. An agentic approach (like the ReAct pattern or say a tool-using agent) will treat the query as a task it needs to solve possibly through multiple steps: it might search (multiple times even), analyze intermediate results, maybe use a calculator or call an API, and finally give an answer. The agent has a kind of mini-planner built in (often the LLM itself does the planning via prompts like “Thought: I should search X… Action: search… Observing results… Thought: now I got this, final answer…”).

So difference: RAG = single retrieval round then answer; Agent = could be multiple retrievals + reasoning steps. Agents shine when a query is complicated or requires combining info from different places. Example: “Compare the growth of Apple vs Microsoft in the last quarter and give me a trend” – an agent might do two searches, get data for Apple, get data for Microsoft, maybe do a quick calculation or summary, then answer. A single-shot RAG might struggle to gather all that in one go (unless the info is conveniently in one doc).

Performance shootout: 85% vs 95% (but at what cost?). Let’s say on a certain set of complex questions, a basic RAG system gets ~85% accuracy – it fails on multi-hop or when reasoning is needed across docs. An agentic approach (which can plan, do multi-hop retrievals, use a calculator etc.) might achieve 95% accuracy on those because it can do more steps. However, the cost is typically latency and complexity: that 95% might come with, say, an average of 5 tool calls (searches or calculations), each adding latency. So maybe the agent’s answers take 10 seconds instead of 1 second. Also each step might call an API (cost), and it’s harder to guarantee what the agent will do (could go down a rabbit hole or get stuck).

There’s also a reliability factor – RAG is relatively deterministic (retrieve best match and answer), whereas agents have more moving parts that can go wrong (like choosing a wrong search query and never finding the right info, or looping). Some academic evaluations have noted that while agents can theoretically solve more, they sometimes fail in unpredictable ways, making their overall reliability not clearly higher than a well-tuned RAG on simpler tasks. Think of it like: RAG is a bicycle – simple, robust; an Agent is a car – can go further and faster, but more ways to break down.

When to use each approach:

- Use RAG when your queries are well-covered by existing knowledge bases and typically only need one round of retrieval. If the task is primarily Q&A or straightforward decision support where the needed info is easily identified with keywords, RAG is efficient and less error-prone. RAG is also usually easier to implement and cheaper to run. For instance, a documentation chatbot or a legal assistant that fetches relevant laws – those work great with RAG because one query = find relevant clause = answer.

- Use an Agentic approach when tasks are more complex, like those involving:

- Multi-step reasoning: e.g., “First find X, then use X to get Y”.

- Tool use beyond text retrieval: e.g., need to call an API, do math, interact with the environment. Agents can interface with calculators, databases, or even execute code.

- Exploratory search: where the query isn’t well-defined. An agent can search iteratively, refining the query like a human researcher might.

- Planning tasks: not just answering a question, but deciding a sequence of actions (like booking travel given constraints – search flights, compare, etc.).

However, if you can achieve the goal with a simpler RAG, do it – because agents bring overhead.